Troubleshoot Hotspot Issues

This document describes how to locate and resolve the problem of read and write hotspots.

As a distributed database, TiDB has a load balancing mechanism to distribute the application loads as evenly as possible to different computing or storage nodes, to make better use of server resources. However, in certain scenarios, some application loads cannot be well distributed, which can affect the performance and form a single point of high load, also known as a hotspot.

TiDB provides a complete solution to troubleshooting, resolving or avoiding hotspots. By balancing load hotspots, overall performance can be improved, including improving QPS and reducing latency.

Common hotspots

This section describes TiDB encoding rules, table hotspots, and index hotspots.

TiDB encoding rules

TiDB assigns a TableID to each table, an IndexID to each index, and a RowID to each row. By default, if the table uses an integer primary key, the value of the primary key is treated as the RowID. Among these IDs, TableID is unique in the entire cluster, while IndexID and RowID are unique in the table. The type of all these IDs is int64.

Each row of data is encoded as a key-value pair according to the following rule:

Key: tablePrefix{tableID}_recordPrefixSep{rowID}

Value: [col1, col2, col3, col4]

The tablePrefix and recordPrefixSep of the key are specific string constants, used to distinguish from other data in the KV space.

For Index data, the key-value pair is encoded according to the following rule:

Key: tablePrefix{tableID}_indexPrefixSep{indexID}_indexedColumnsValue

Value: rowID

Index data has two types: the unique index and the non-unique index.

For unique indexes, the encoding rule above applies as is.

For non-unique indexes, a unique key cannot be constructed through this encoding, because the tablePrefix{tableID}_indexPrefixSep{indexID} of the same index is the same and the ColumnsValue of multiple rows might be the same. The encoding rule for non-unique indexes is as follows:

Key: tablePrefix{tableID}_indexPrefixSep{indexID}_indexedColumnsValue_rowID

Value: null
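The ordering consequence of these rules can be sketched in Python. The snippet below is a simplified model, not TiDB's actual codec: the byte values `t`, `_r`, and `_i` for the prefixes, the sign-flipped big-endian integer encoding, and all helper names are assumptions made for illustration.

```python
import struct

# Assumed constants for illustration; the source only says they are string constants.
TABLE_PREFIX = b"t"
RECORD_PREFIX_SEP = b"_r"
INDEX_PREFIX_SEP = b"_i"


def encode_int64(value: int) -> bytes:
    """Encode a signed int64 so that byte order matches numeric order."""
    # Shifting by 2**63 flips the sign bit; big-endian keeps the order byte-comparable.
    return struct.pack(">Q", value + (1 << 63))


def row_key(table_id: int, row_id: int) -> bytes:
    """Key: tablePrefix{tableID}_recordPrefixSep{rowID}"""
    return TABLE_PREFIX + encode_int64(table_id) + RECORD_PREFIX_SEP + encode_int64(row_id)


def unique_index_key(table_id: int, index_id: int, indexed_value: bytes) -> bytes:
    """Key: tablePrefix{tableID}_indexPrefixSep{indexID}_indexedColumnsValue"""
    return (TABLE_PREFIX + encode_int64(table_id)
            + INDEX_PREFIX_SEP + encode_int64(index_id) + indexed_value)


def non_unique_index_key(table_id: int, index_id: int,
                         indexed_value: bytes, row_id: int) -> bytes:
    """Same as the unique index key, with the rowID appended to make it unique."""
    return unique_index_key(table_id, index_id, indexed_value) + encode_int64(row_id)


# Row keys of one table sort by RowID, so consecutive RowIDs are neighbors.
ordered_keys = sorted(row_key(10, r) for r in (3, 1, 2))
```

In this model, two rows with the same indexed value collide under the unique-index rule but get distinct keys under the non-unique rule, which is why the rowID is appended to the key.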

Table hotspots

According to the TiDB encoding rules, the data of the same table falls in a range prefixed by the TableID, and the data is arranged in the order of RowID values. When RowID values are incremented during inserts, each inserted row can only be appended to the end of the range. A Region splits after it reaches a certain size, after which new rows are still appended to the end of the range. As a result, INSERT operations are executed on only one Region at a time, forming a hotspot.

The common auto-increment primary key is sequentially increasing. When the primary key is of the integer type, the value of the primary key is used as the RowID by default. At this time, the RowID is sequentially increasing, and a write hotspot of the table forms when a large number of INSERT operations exist.

Meanwhile, the RowID in TiDB is also sequentially auto-incremental by default. When the primary key is not an integer type, you might also encounter the problem of write hotspots.
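The effect of sequential RowIDs can be illustrated with a toy simulation. This is only a sketch under stated assumptions: the `RegionMap` class, the split threshold of 100 keys, and the split-in-half policy are hypothetical simplifications and do not reflect how TiKV actually sizes or splits Regions.

```python
import bisect
import random


class RegionMap:
    """Toy model: each Region owns a contiguous key range and splits in half when full."""

    def __init__(self, max_keys_per_region=100):
        self.max_keys = max_keys_per_region
        self.starts = [0]   # start key of each Region (assumes non-negative keys)
        self.keys = [[]]    # sorted keys held by each Region

    def insert(self, key):
        """Insert a key; return True if it landed in the last Region of the range."""
        idx = bisect.bisect_right(self.starts, key) - 1
        hit_tail = idx == len(self.starts) - 1
        bisect.insort(self.keys[idx], key)
        if len(self.keys[idx]) > self.max_keys:
            self._split(idx)
        return hit_tail

    def _split(self, idx):
        keys = self.keys[idx]
        mid = len(keys) // 2
        self.starts.insert(idx + 1, keys[mid])
        self.keys[idx:idx + 1] = [keys[:mid], keys[mid:]]


def tail_write_ratio(keys):
    """Fraction of inserts that hit the Region covering the end of the range."""
    regions = RegionMap()
    tail_writes = sum(1 for key in keys if regions.insert(key))
    return tail_writes / len(keys)


sequential = list(range(1, 10001))
scattered = sequential[:]
random.Random(0).shuffle(scattered)

sequential_ratio = tail_write_ratio(sequential)  # every write hits the tail Region
scattered_ratio = tail_write_ratio(scattered)    # writes spread across Regions
```

With sequential keys, every insert lands in the Region at the end of the range no matter how many splits occur. With the same keys inserted in scattered order, only a small fraction of writes hit that Region.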

In addition, when hotspots occur during the process of data writes (on a newly created table or partition) or data reads (periodic read hotspots in read-only scenarios), you can control the Region merge behavior using table attributes. For details, see Control the Region merge behavior using table attributes.

Index hotspots

Index hotspots are similar to table hotspots. Common index hotspots appear on fields that increase monotonically in time order, or in INSERT scenarios with a large number of repeated values.

Identify hotspot issues

Performance problems are not necessarily caused by hotspots and might be caused by multiple factors. Before troubleshooting, confirm whether the problem is related to hotspots.

To judge write hotspots, open Hot Write in the TiKV-Trouble-Shooting monitoring panel to check whether the Raftstore CPU metric value of any TiKV node is significantly higher than that of other nodes.

To judge read hotspots, open Thread_CPU in the TiKV-Details monitoring panel to check whether the coprocessor CPU metric value of any TiKV node is particularly high.
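If the per-node CPU values are exported from the monitoring panels, the comparison can be automated. The following is a minimal sketch: the `find_hot_nodes` helper, the node names, and the threshold of twice the median of the other nodes are all assumptions chosen for illustration, not a rule defined by TiDB.

```python
from statistics import median


def find_hot_nodes(cpu_by_node, ratio=2.0):
    """Return nodes whose CPU usage is at least `ratio` times the median of the other nodes."""
    hot = []
    for node, cpu in cpu_by_node.items():
        others = [value for name, value in cpu_by_node.items() if name != node]
        if others and cpu > 0 and cpu >= ratio * median(others):
            hot.append(node)
    return sorted(hot)


# Hypothetical Raftstore CPU percentages per TiKV node.
skewed = {"tikv-1": 85.0, "tikv-2": 20.0, "tikv-3": 25.0}
balanced = {"tikv-1": 30.0, "tikv-2": 32.0, "tikv-3": 31.0}

hot_in_skewed = find_hot_nodes(skewed)
hot_in_balanced = find_hot_nodes(balanced)
```

A flagged node is only a hint that a hotspot might exist; the threshold should be tuned to the cluster's normal load variation.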

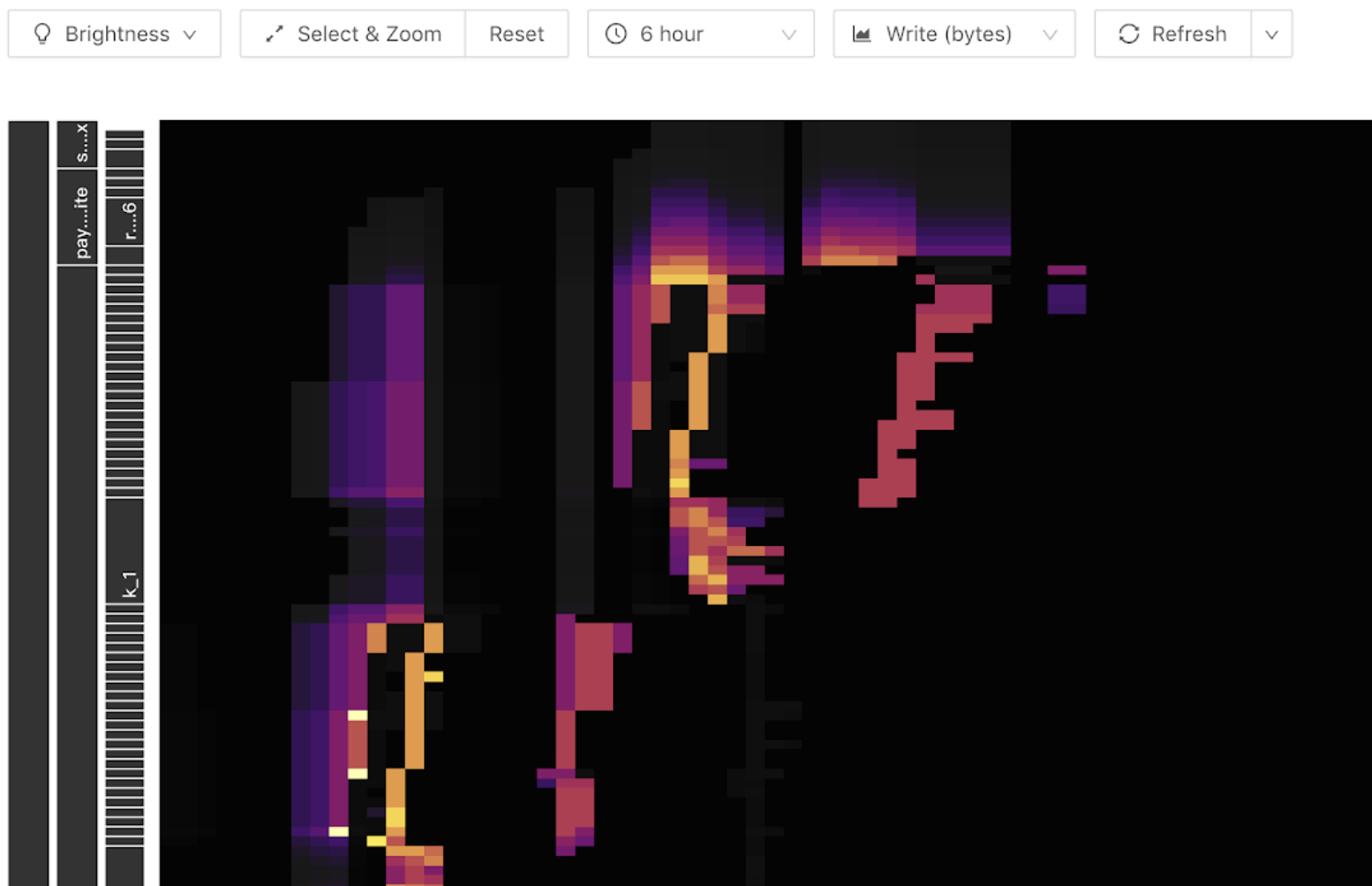

Use TiDB Dashboard to locate hotspot tables

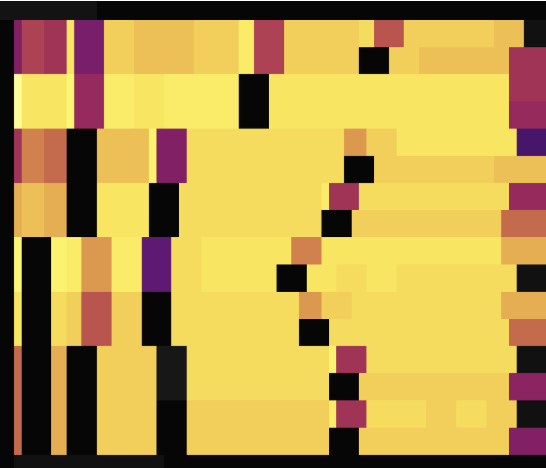

The Key Visualizer feature in TiDB Dashboard helps users narrow down the hotspot troubleshooting scope to the table level. The following is an example of the thermal diagram shown by Key Visualizer. The horizontal axis of the graph is time, and the vertical axis shows the tables and indexes. The brighter the color, the greater the load. You can switch between the read and write flows in the toolbar.

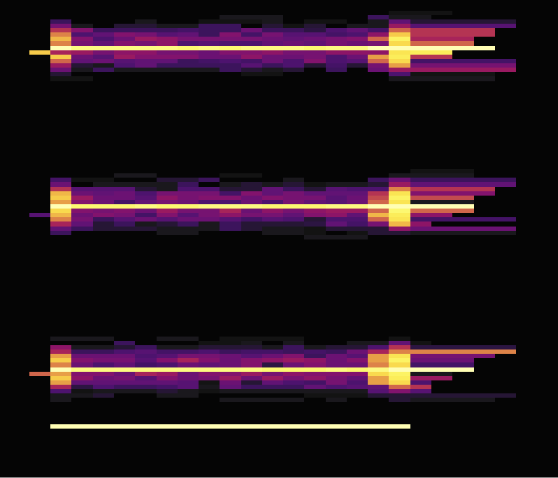

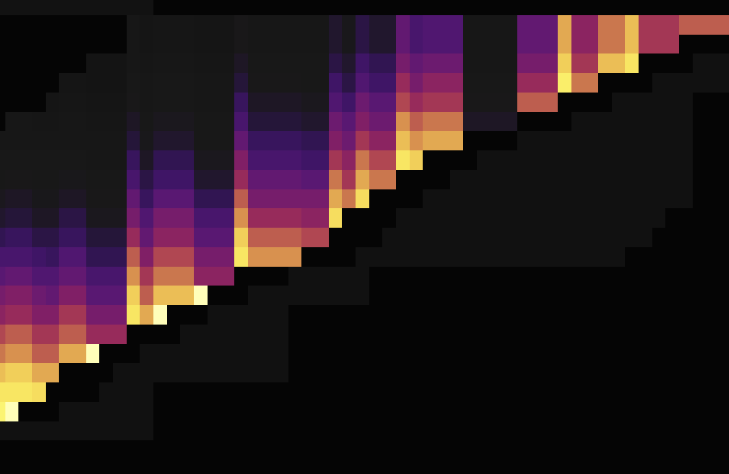

Bright diagonal lines (oblique upward or downward) like the following can appear in the write flow graph. Because writes only occur at the end of the range, the line looks like a staircase as the number of table Regions grows. This pattern indicates a write hotspot in the table:

For read hotspots, a bright horizontal line is generally shown in the thermal diagram. Usually these are caused by small tables with a large number of accesses, shown as follows:

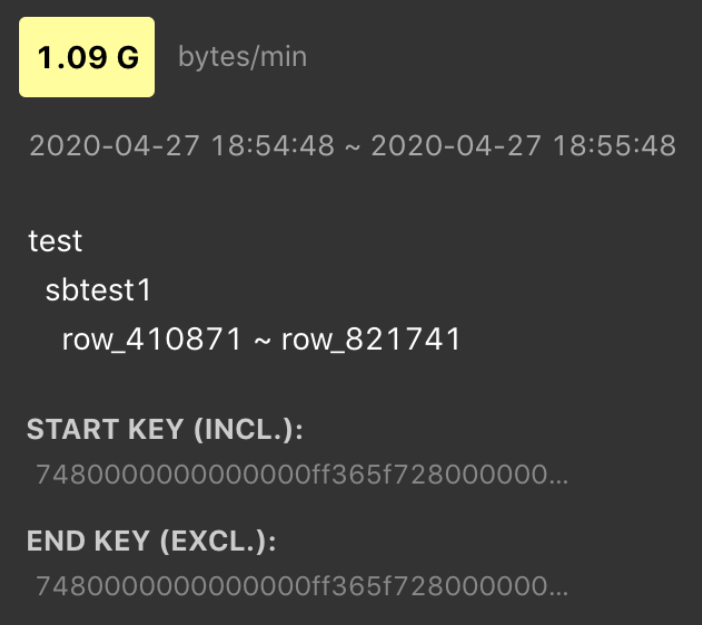

Hover over a bright block to see which table or index has a heavy load. For example:

Use SHARD_ROW_ID_BITS to process hotspots

For a table with a non-integer primary key, a joint primary key, or no primary key, TiDB uses an implicit auto-increment RowID. When a large number of INSERT operations exist, the data is written into a single Region, resulting in a write hotspot.

By setting SHARD_ROW_ID_BITS, row IDs are scattered and written into multiple Regions, which can alleviate the write hotspot issue.

SHARD_ROW_ID_BITS = 4 # Represents 16 shards.

SHARD_ROW_ID_BITS = 6 # Represents 64 shards.

SHARD_ROW_ID_BITS = 0 # Represents the default 1 shard.

Statement example:

CREATE TABLE: CREATE TABLE t (c int) SHARD_ROW_ID_BITS = 4;

ALTER TABLE: ALTER TABLE t SHARD_ROW_ID_BITS = 4;

The value of SHARD_ROW_ID_BITS can be dynamically modified. The modified value only takes effect for newly written data.
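The scattering effect can be modeled as placing a shard number in the high bits of the row ID. The sketch below is an assumption-laden illustration: the exact bit layout, the use of a random shard per row, and the helper names are hypothetical and are not taken from TiDB's implementation.

```python
import random


def sharded_row_id(sequence: int, shard_bits: int, rng: random.Random) -> int:
    """Place a random shard number in the high bits of a 64-bit row ID (sign bit stays 0)."""
    if shard_bits == 0:
        return sequence
    shard = rng.getrandbits(shard_bits)
    return (shard << (63 - shard_bits)) | sequence


def shards_used(row_ids, shard_bits: int) -> set:
    """Return the set of distinct shard prefixes among the given row IDs."""
    if shard_bits == 0:
        return {0}
    return {row_id >> (63 - shard_bits) for row_id in row_ids}


rng = random.Random(42)
sequence_values = range(1, 1001)

unsharded_ids = [sharded_row_id(s, 0, rng) for s in sequence_values]
sharded_ids = [sharded_row_id(s, 4, rng) for s in sequence_values]

unsharded_count = len(shards_used(unsharded_ids, 0))  # a single contiguous range
sharded_count = len(shards_used(sharded_ids, 4))      # up to 2**4 separate ranges
```

Each distinct prefix corresponds to a separate key range, so the same sequence of inserts is spread over up to 2^n ranges instead of being appended to one.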

For the table with a primary key of the CLUSTERED type, TiDB uses the primary key of the table as the RowID. At this time, the SHARD_ROW_ID_BITS option cannot be used because it changes the RowID generation rules. For the table with the primary key of the NONCLUSTERED type, TiDB uses an automatically allocated 64-bit integer as the RowID. In this case, you can use the SHARD_ROW_ID_BITS feature. For more details about the primary key of the CLUSTERED type, refer to clustered index.

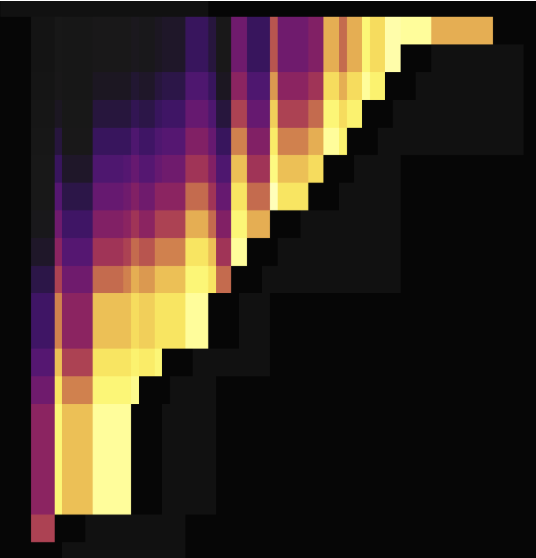

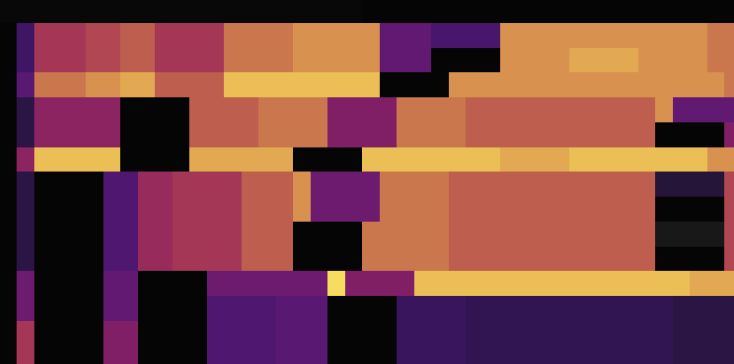

The following two load diagrams show the case where two tables without primary keys use SHARD_ROW_ID_BITS to scatter hotspots. The first diagram shows the situation before scattering hotspots, while the second one shows the situation after scattering hotspots.

As shown in the load diagrams above, before setting SHARD_ROW_ID_BITS, load hotspots are concentrated on a single Region. After setting SHARD_ROW_ID_BITS, load hotspots become scattered.

Handle auto-increment primary key hotspot tables using AUTO_RANDOM

To resolve the write hotspots caused by auto-increment primary keys, use AUTO_RANDOM on the tables that have them.

If this feature is enabled, TiDB generates randomly distributed and non-repeated (before the space is used up) primary keys to achieve the purpose of scattering write hotspots.

Note that the primary keys generated by TiDB are no longer auto-increment primary keys and you can use LAST_INSERT_ID() to obtain the primary key value assigned last time.

To use this feature, modify AUTO_INCREMENT to AUTO_RANDOM in the CREATE TABLE statement. This feature is suitable for non-application scenarios where the primary keys only need to guarantee uniqueness.

For example:

CREATE TABLE t (a BIGINT PRIMARY KEY AUTO_RANDOM, b varchar(255));

INSERT INTO t (b) VALUES ("foo");

SELECT * FROM t;

+------------+-----+

| a          | b   |

+------------+-----+

| 1073741825 | foo |

+------------+-----+

SELECT LAST_INSERT_ID();

+------------------+

| LAST_INSERT_ID() |

+------------------+

| 1073741825 |

+------------------+

The following two load diagrams show the situations before and after modifying AUTO_INCREMENT to AUTO_RANDOM to scatter hotspots. The first one uses AUTO_INCREMENT, while the second one uses AUTO_RANDOM.

As shown in the load diagrams above, replacing AUTO_INCREMENT with AUTO_RANDOM scatters hotspots effectively.

For more details, see AUTO_RANDOM.
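The phrase "randomly distributed and non-repeated (before the space is used up)" can be illustrated with a small allocator. This is a hedged sketch: the `AutoRandomAllocator` class, the layout of one sign bit followed by random shard bits and an incrementing sequence, and the default of 5 shard bits are assumptions for illustration and might differ from what TiDB does internally.

```python
import random


class AutoRandomAllocator:
    """Toy allocator: random high bits for scattering, incrementing low bits for uniqueness."""

    def __init__(self, shard_bits=5, total_bits=64, seed=None):
        self.shard_bits = shard_bits
        self.incr_bits = total_bits - 1 - shard_bits  # one bit is reserved for the sign
        self.next_seq = 1
        self.rng = random.Random(seed)

    def allocate(self) -> int:
        if self.next_seq >= (1 << self.incr_bits):
            raise OverflowError("incremental space is used up")
        shard = self.rng.getrandbits(self.shard_bits)
        value = (shard << self.incr_bits) | self.next_seq
        self.next_seq += 1
        return value


allocator = AutoRandomAllocator(seed=1)
ids = [allocator.allocate() for _ in range(1000)]

# A deliberately tiny ID space (8 bits, 2 shard bits) to show exhaustion.
small = AutoRandomAllocator(shard_bits=2, total_bits=8, seed=1)
small_ids = [small.allocate() for _ in range(31)]
```

Uniqueness comes from the incrementing low bits, so values never repeat until that part is exhausted, while the random high bits keep consecutive inserts from landing in the same key range.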

Optimization of small table hotspots

The Coprocessor Cache feature of TiDB supports caching the results of computations that are pushed down to TiKV. After this feature is enabled, TiDB caches these pushed-down computing results and can reuse them for repeated requests. This feature works well for read hotspots of small tables.

For more details, see Coprocessor Cache.
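Why result caching helps a frequently read small table can be shown with a toy model. Everything here is hypothetical: the `ToyRegion` and `ResultCache` classes, the version counter used for invalidation, and the single cached request are assumptions for illustration rather than a description of how Coprocessor Cache is implemented.

```python
class ToyRegion:
    """Stand-in for a Region of a small table."""

    def __init__(self, rows):
        self.rows = list(rows)
        self.version = 0   # bumped on every write (assumed invalidation signal)
        self.scans = 0     # how many times the data was actually scanned

    def write(self, row):
        self.rows.append(row)
        self.version += 1

    def compute_sum(self):
        self.scans += 1
        return sum(self.rows)


class ResultCache:
    """Caches a pushed-down result and reuses it while the data is unchanged."""

    def __init__(self):
        self._entries = {}  # (region id, request name) -> (version, result)

    def sum_rows(self, region):
        key = (id(region), "sum")
        cached = self._entries.get(key)
        if cached is not None and cached[0] == region.version:
            return cached[1]
        result = region.compute_sum()
        self._entries[key] = (region.version, result)
        return result


region = ToyRegion([1, 2, 3])
cache = ResultCache()

results = [cache.sum_rows(region) for _ in range(100)]
scans_before_write = region.scans   # one scan served all 100 reads

region.write(4)
result_after_write = cache.sum_rows(region)
scans_after_write = region.scans    # the write invalidated the cached result
```

In this model, repeated reads of unchanged data cost one scan in total, which is the situation a read-heavy small table is in most of the time.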

See also: