- Docs Home

- About TiDB

- Quick Start

- Develop

- Overview

- Quick Start

- Build a TiDB Cluster in TiDB Cloud (Developer Tier)

- CRUD SQL in TiDB

- Build a Simple CRUD App with TiDB

- Example Applications

- Connect to TiDB

- Design Database Schema

- Write Data

- Read Data

- Transaction

- Optimize

- Troubleshoot

- Reference

- Cloud Native Development Environment

- Third-party Support

- Deploy

- Software and Hardware Requirements

- Environment Configuration Checklist

- Plan Cluster Topology

- Install and Start

- Verify Cluster Status

- Test Cluster Performance

- Migrate

- Overview

- Migration Tools

- Migration Scenarios

- Migrate from Aurora

- Migrate MySQL of Small Datasets

- Migrate MySQL of Large Datasets

- Migrate and Merge MySQL Shards of Small Datasets

- Migrate and Merge MySQL Shards of Large Datasets

- Migrate from CSV Files

- Migrate from SQL Files

- Migrate from One TiDB Cluster to Another TiDB Cluster

- Migrate from TiDB to MySQL-compatible Databases

- Advanced Migration

- Integrate

- Overview

- Integration Scenarios

- Maintain

- Monitor and Alert

- Troubleshoot

- TiDB Troubleshooting Map

- Identify Slow Queries

- Analyze Slow Queries

- SQL Diagnostics

- Identify Expensive Queries Using Top SQL

- Identify Expensive Queries Using Logs

- Statement Summary Tables

- Troubleshoot Hotspot Issues

- Troubleshoot Increased Read and Write Latency

- Save and Restore the On-Site Information of a Cluster

- Troubleshoot Cluster Setup

- Troubleshoot High Disk I/O Usage

- Troubleshoot Lock Conflicts

- Troubleshoot TiFlash

- Troubleshoot Write Conflicts in Optimistic Transactions

- Troubleshoot Inconsistency Between Data and Indexes

- Performance Tuning

- Tuning Guide

- Configuration Tuning

- System Tuning

- Software Tuning

- SQL Tuning

- Overview

- Understanding the Query Execution Plan

- SQL Optimization Process

- Overview

- Logic Optimization

- Physical Optimization

- Prepare Execution Plan Cache

- Control Execution Plans

- Tutorials

- TiDB Tools

- Overview

- Use Cases

- Download

- TiUP

- Documentation Map

- Overview

- Terminology and Concepts

- Manage TiUP Components

- FAQ

- Troubleshooting Guide

- Command Reference

- Overview

- TiUP Commands

- TiUP Cluster Commands

- Overview

- tiup cluster audit

- tiup cluster check

- tiup cluster clean

- tiup cluster deploy

- tiup cluster destroy

- tiup cluster disable

- tiup cluster display

- tiup cluster edit-config

- tiup cluster enable

- tiup cluster help

- tiup cluster import

- tiup cluster list

- tiup cluster patch

- tiup cluster prune

- tiup cluster reload

- tiup cluster rename

- tiup cluster replay

- tiup cluster restart

- tiup cluster scale-in

- tiup cluster scale-out

- tiup cluster start

- tiup cluster stop

- tiup cluster template

- tiup cluster upgrade

- TiUP DM Commands

- Overview

- tiup dm audit

- tiup dm deploy

- tiup dm destroy

- tiup dm disable

- tiup dm display

- tiup dm edit-config

- tiup dm enable

- tiup dm help

- tiup dm import

- tiup dm list

- tiup dm patch

- tiup dm prune

- tiup dm reload

- tiup dm replay

- tiup dm restart

- tiup dm scale-in

- tiup dm scale-out

- tiup dm start

- tiup dm stop

- tiup dm template

- tiup dm upgrade

- TiDB Cluster Topology Reference

- DM Cluster Topology Reference

- Mirror Reference Guide

- TiUP Components

- PingCAP Clinic Diagnostic Service

- TiDB Operator

- Dumpling

- TiDB Lightning

- TiDB Data Migration

- About TiDB Data Migration

- Architecture

- Quick Start

- Deploy a DM cluster

- Tutorials

- Advanced Tutorials

- Maintain

- Cluster Upgrade

- Tools

- Performance Tuning

- Manage Data Sources

- Manage Tasks

- Export and Import Data Sources and Task Configurations of Clusters

- Handle Alerts

- Daily Check

- Reference

- Architecture

- Command Line

- Configuration Files

- OpenAPI

- Compatibility Catalog

- Secure

- Monitoring and Alerts

- Error Codes

- Glossary

- Example

- Troubleshoot

- Release Notes

- Backup & Restore (BR)

- Point-in-Time Recovery

- TiDB Binlog

- TiCDC

- Dumpling

- sync-diff-inspector

- TiSpark

- Reference

- Cluster Architecture

- Key Monitoring Metrics

- Secure

- Privileges

- SQL

- SQL Language Structure and Syntax

- SQL Statements

ADD COLUMNADD INDEXADMINADMIN CANCEL DDLADMIN CHECKSUM TABLEADMIN CHECK [TABLE|INDEX]ADMIN SHOW DDL [JOBS|QUERIES]ADMIN SHOW TELEMETRYALTER DATABASEALTER INDEXALTER INSTANCEALTER PLACEMENT POLICYALTER TABLEALTER TABLE COMPACTALTER TABLE SET TIFLASH MODEALTER USERANALYZE TABLEBACKUPBATCHBEGINCHANGE COLUMNCOMMITCHANGE DRAINERCHANGE PUMPCREATE [GLOBAL|SESSION] BINDINGCREATE DATABASECREATE INDEXCREATE PLACEMENT POLICYCREATE ROLECREATE SEQUENCECREATE TABLE LIKECREATE TABLECREATE USERCREATE VIEWDEALLOCATEDELETEDESCDESCRIBEDODROP [GLOBAL|SESSION] BINDINGDROP COLUMNDROP DATABASEDROP INDEXDROP PLACEMENT POLICYDROP ROLEDROP SEQUENCEDROP STATSDROP TABLEDROP USERDROP VIEWEXECUTEEXPLAIN ANALYZEEXPLAINFLASHBACK TABLEFLUSH PRIVILEGESFLUSH STATUSFLUSH TABLESGRANT <privileges>GRANT <role>INSERTKILL [TIDB]LOAD DATALOAD STATSMODIFY COLUMNPREPARERECOVER TABLERENAME INDEXRENAME TABLEREPLACERESTOREREVOKE <privileges>REVOKE <role>ROLLBACKSAVEPOINTSELECTSET DEFAULT ROLESET [NAMES|CHARACTER SET]SET PASSWORDSET ROLESET TRANSACTIONSET [GLOBAL|SESSION] <variable>SHOW ANALYZE STATUSSHOW [BACKUPS|RESTORES]SHOW [GLOBAL|SESSION] BINDINGSSHOW BUILTINSSHOW CHARACTER SETSHOW COLLATIONSHOW [FULL] COLUMNS FROMSHOW CONFIGSHOW CREATE PLACEMENT POLICYSHOW CREATE SEQUENCESHOW CREATE TABLESHOW CREATE USERSHOW DATABASESSHOW DRAINER STATUSSHOW ENGINESSHOW ERRORSSHOW [FULL] FIELDS FROMSHOW GRANTSSHOW INDEX [FROM|IN]SHOW INDEXES [FROM|IN]SHOW KEYS [FROM|IN]SHOW MASTER STATUSSHOW PLACEMENTSHOW PLACEMENT FORSHOW PLACEMENT LABELSSHOW PLUGINSSHOW PRIVILEGESSHOW [FULL] PROCESSSLISTSHOW PROFILESSHOW PUMP STATUSSHOW SCHEMASSHOW STATS_HEALTHYSHOW STATS_HISTOGRAMSSHOW STATS_METASHOW STATUSSHOW TABLE NEXT_ROW_IDSHOW TABLE REGIONSSHOW TABLE STATUSSHOW [FULL] TABLESSHOW [GLOBAL|SESSION] VARIABLESSHOW WARNINGSSHUTDOWNSPLIT REGIONSTART TRANSACTIONTABLETRACETRUNCATEUPDATEUSEWITH

- Data Types

- Functions and Operators

- Overview

- Type Conversion in Expression Evaluation

- Operators

- Control Flow Functions

- String Functions

- Numeric Functions and Operators

- Date and Time Functions

- Bit Functions and Operators

- Cast Functions and Operators

- Encryption and Compression Functions

- Locking Functions

- Information Functions

- JSON Functions

- Aggregate (GROUP BY) Functions

- Window Functions

- Miscellaneous Functions

- Precision Math

- Set Operations

- List of Expressions for Pushdown

- TiDB Specific Functions

- Clustered Indexes

- Constraints

- Generated Columns

- SQL Mode

- Table Attributes

- Transactions

- Garbage Collection (GC)

- Views

- Partitioning

- Temporary Tables

- Cached Tables

- Character Set and Collation

- Placement Rules in SQL

- System Tables

mysql- INFORMATION_SCHEMA

- Overview

ANALYZE_STATUSCLIENT_ERRORS_SUMMARY_BY_HOSTCLIENT_ERRORS_SUMMARY_BY_USERCLIENT_ERRORS_SUMMARY_GLOBALCHARACTER_SETSCLUSTER_CONFIGCLUSTER_HARDWARECLUSTER_INFOCLUSTER_LOADCLUSTER_LOGCLUSTER_SYSTEMINFOCOLLATIONSCOLLATION_CHARACTER_SET_APPLICABILITYCOLUMNSDATA_LOCK_WAITSDDL_JOBSDEADLOCKSENGINESINSPECTION_RESULTINSPECTION_RULESINSPECTION_SUMMARYKEY_COLUMN_USAGEMETRICS_SUMMARYMETRICS_TABLESPARTITIONSPLACEMENT_POLICIESPROCESSLISTREFERENTIAL_CONSTRAINTSSCHEMATASEQUENCESSESSION_VARIABLESSLOW_QUERYSTATISTICSTABLESTABLE_CONSTRAINTSTABLE_STORAGE_STATSTIDB_HOT_REGIONSTIDB_HOT_REGIONS_HISTORYTIDB_INDEXESTIDB_SERVERS_INFOTIDB_TRXTIFLASH_REPLICATIKV_REGION_PEERSTIKV_REGION_STATUSTIKV_STORE_STATUSUSER_PRIVILEGESVARIABLES_INFOVIEWS

METRICS_SCHEMA

- UI

- TiDB Dashboard

- Overview

- Maintain

- Access

- Overview Page

- Cluster Info Page

- Top SQL Page

- Key Visualizer Page

- Metrics Relation Graph

- SQL Statements Analysis

- Slow Queries Page

- Cluster Diagnostics

- Monitoring Page

- Search Logs Page

- Instance Profiling

- Session Management and Configuration

- FAQ

- CLI

- Command Line Flags

- Configuration File Parameters

- System Variables

- Storage Engines

- Telemetry

- Errors Codes

- Table Filter

- Schedule Replicas by Topology Labels

- FAQs

- Release Notes

- All Releases

- Release Timeline

- TiDB Versioning

- TiDB Installation Packages

- v6.2

- v6.1

- v6.0

- v5.4

- v5.3

- v5.2

- v5.1

- v5.0

- v4.0

- v3.1

- v3.0

- v2.1

- v2.0

- v1.0

- Glossary

Integrate TiDB with ProxySQL

This document describes how to integrate TiDB with ProxySQL using CentOS 7 as an example. If you want to integrate using other systems, refer to the Try Out section, which introduces how to deploy a test integration environment using Docker and Docker Compose. For more information, refer to:

1. Start TiDB

Test environment

- TiDB Cloud

- Source compilation

- TiUP

You can refer to Build a TiDB cluster in TiDB Cloud (Developer Tier).

Download the TiDB source code, change to the

tidb-serverfolder and run thego buildcommand.git clone git@github.com:pingcap/tidb.git cd tidb/tidb-server go buildUse the configuration file

tidb-config.tomlto start TiDB. The command is as follows:${TIDB_SERVER_PATH} -config ./tidb-config.toml -store unistore -path "" -lease 0s > ${LOCAL_TIDB_LOG} 2>&1 &Note- The preceding command uses

unistoreas the storage engine, which is a test storage engine in TiDB. Make sure that you use it in a test environment only. TIDB_SERVER_PATH: the path of the compiled binary usinggo build. For example, if you execute the previous command under/usr/local,TIDB_SERVER_PATHis/usr/local/tidb/tidb-server/tidb-server.LOCAL_TIDB_LOG: the log file path of TiDB.

- The preceding command uses

TiUP, as the TiDB package manager, makes it easier to manage different cluster components in the TiDB ecosystem, such as TiDB, PD, and TiKV.

Install TiUP:

curl --proto '=https' --tlsv1.2 -sSf https://tiup-mirrors.pingcap.com/install.sh | shStart TiDB in a test environment:

tiup playground

Production environment

- TiDB Cloud

- Deploy Locally

It is recommended to use TiDB Cloud directly when you need hosting TiDB services (for example, you cannot manage it yourself, or you need a cloud-native environment). To build a TiDB cluster in a production environment, refer to Create a TiDB cluster.

The production environment requires more steps than the test environment. To deploy an on-premises production cluster, it is recommended to refer to Deploy a TiDB cluster using TiUP and then deploy it based on hardware conditions.

2. Start ProxySQL

Install ProxySQL by yum

Add the ProxySQL repository:

cat > /etc/yum.repos.d/proxysql.repo << EOF [proxysql] name=ProxySQL YUM repository baseurl=https://repo.proxysql.com/ProxySQL/proxysql-2.4.x/centos/\$releasever gpgcheck=1 gpgkey=https://repo.proxysql.com/ProxySQL/proxysql-2.4.x/repo_pub_key EOFInstall ProxySQL:

yum install proxysqlStart ProxySQL:

systemctl start proxysql

Other installation ways

To install ProxySQL using other ways, refer to the ProxySQL README or the ProxySQL installation documentation.

3. Configure ProxySQL

To use ProxySQL as a proxy for TiDB, you need to configure ProxySQL. The required configuration items are listed in the following sections. For more details about other configuration items, refer to the ProxySQL official documentation.

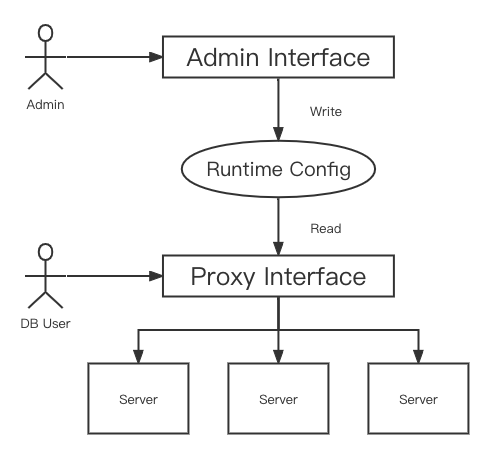

Simple introduction

ProxySQL uses a port to manage configuration, which is ProxySQL Admin interface, and a port to proxy, which is ProxySQL MySQL Interface.

- ProxySQL Admin interface: To connect to the admin interface, you can use an

adminuser to read and write configuration, or use astatsuser to read part of statistics (cannot read or write configuration). The default credentials areadmin:adminandstats:stats. For security reasons, you can use the default credentials to connect locally, but to connect remotely, you need to configure a new user, which is often namedradmin. - ProxySQL MySQL Interface: Used as a proxy to forward SQL to the configured service.

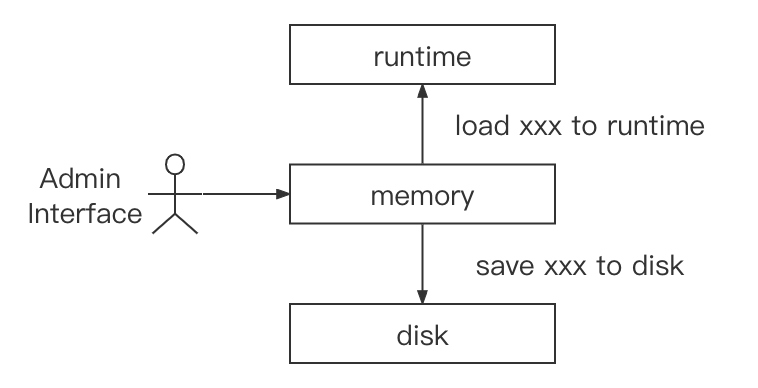

There are three layers in ProxySQL configurations: runtime, memory, and disk. You can change the configuration of the memory layer only. After modifying the configuration, you can use LOAD xxx TO runtime to make the configuration effective, and/or you can use SAVE xxx TO DISK to save to the disk to prevent configuration loss.

Configure TiDB server

You can add multiple TiDB servers in ProxySQL. To add TiDB servers, perform the following at ProxySQL Admin interface:

INSERT INTO mysql_servers(hostgroup_id, hostname, port) VALUES (0, '127.0.0.1', 4000);

LOAD mysql servers TO runtime;

SAVE mysql servers TO DISK;

Field description:

hostgroup_id: ProxySQL manages servers by hostgroup. To distribute SQL to these servers evenly, you can configure several servers that need load balancing to the same hostgroup. To distinguish the servers, such as read and write splitting, you can configure them to different hostgroup.hostname: The IP or domain of the TiDB server.port: The port of the TiDB server.

Configure Proxy login users

After adding a TiDB server user to ProxySQL, ProxySQL allows this user to log in ProxySQL MySQL Interface and create a connection with TiDB. Make sure that the user has appropriate permissions in TiDB. To add a TiDB server user, perform the following at ProxySQL Admin interface:

INSERT INTO mysql_users(username, password, active, default_hostgroup, transaction_persistent) VALUES ('root', '', 1, 0, 1);

LOAD mysql users TO runtime;

SAVE mysql users TO DISK;

Field description:

username: The user name.password: The password.active: Controls whether the user is active.1is active, and0is inactive. Only when theactiveis1, the user can log in.default_hostgroup: The default hostgroup used by the user, where SQL distributed to unless the query rule routes the traffic to a specific hostgroup.transaction_persistent:1indicates persistent transaction. That is, when the user starts a transaction in a connection, all statements are routed to the same hostgroup until the transaction is committed or rolled back.

Configure ProxySQL by a configuration file

In addition to configuring using ProxySQL Admin interface, you can also configure ProxySQL using a configuration file. In the Configuring ProxySQL through the config file document, the configuration file should only be considered as a secondary way of initializing ProxySQL, not the primary way. The configuration file is only used when the SQLite is not created and will not be used after the SQLite is created. When using the configuration file to configure ProxySQL, you should delete SQLite first using the following command. But this loses configuration changes in ProxySQL Admin interface.

rm /var/lib/proxysql/proxysql.db

Alternatively, you can execute the LOAD xxx FROM CONFIG command to overwrite the current configuration.

The path of the configuration file is /etc/proxysql.cnf. To configure required configuration items in the preceding sections with the configuration file, the following takes mysql_servers and mysql_users as an example. To modify other items, refer to the /etc/proxysql.cnf.

mysql_servers =

(

{

address="127.0.0.1"

port=4000

hostgroup=0

max_connections=2000

}

)

mysql_users:

(

{

username = "root"

password = ""

default_hostgroup = 0

max_connections = 1000

default_schema = "test"

active = 1

transaction_persistent = 1

}

)

To make the preceding modifications effective, use the systemctl restart proxysql command to restart ProxySQL. Then the SQLite database will be created automatically and the configuration file will not be ignored.

Other configuration items

The preceding configuration items are required. For optional configuration items, refer to Global variables.

4. Try out

To quick start the test environment, you can use Docker and Docker Compose. Make sure the ports 4000 and 6033 are not allocated.

git clone https://github.com/Icemap/tidb-proxysql-integration-test.git

cd tidb-proxysql-integration-test && docker-compose pull # Get the latest Docker images

sudo setenforce 0 # Only on Linux

docker-compose up -d

DO NOT use the preceding commands to create an integration in production environments.

The preceding commands start an environment integrated TiDB with ProxySQL and runs two containers. To log in to the ProxySQL 6033 port, you can use the root username with an empty password. For more information about the configuration of containers, see docker-compose.yaml. For more details about the configuration of ProxySQL, see proxysql-docker.cnf.

To connect to TiDB, run the following command:

mysql -u root -h 127.0.0.1 -P 6033 -e "SELECT VERSION()"

An example result is as follows:

+--------------------+

| VERSION() |

+--------------------+

| 5.7.25-TiDB-v6.1.0 |

+--------------------+

5. Configuration examples

Dependencies:

- Docker

- Docker Compose

- MySQL Client

Clone the example code repository and change to the sample directory:

git clone https://github.com/Icemap/tidb-proxysql-integration-test.git

cd tidb-proxysql-integration-test

The following sections use tidb-proxysql-integration-test as the root directory.

Use Admin Interface to configure load balancing

Change to the sample directory:

cd example/load-balance-admin-interface

Run with a script

To configure load balancing using ProxySQL Admin Interface, you can run with the test-load-balance.sh script using the following command:

./test-load-balance.sh

Run step by step

The preceding test-load-balance.sh script can be run step by step as follows:

Start three TiDB containers and a ProxySQL instance.

docker-compose up -d- Start three TiDB containers using

docker-compose. All the ports in the container are4000and host ports are4001,4002and4003. - After starting TiDB containers, the ProxySQL instance is started. The port of ProxySQL MySQL Interface in container is

6033and the host port is6034. - The port of ProxySQL Admin Interface is not exposed because it can only be accessed in the container.

- For more details about the process, refer to

docker-compose.yaml.

- Start three TiDB containers using

In the three TiDB containers, create the same table schema with different data (

'tidb-0','tidb-1'and'tidb-2') to distinguish TiDB instances.mysql -u root -h 127.0.0.1 -P 4001 << EOF DROP TABLE IF EXISTS test.test; CREATE TABLE test.test (db VARCHAR(255)); INSERT INTO test.test (db) VALUES ('tidb-0'); EOF mysql -u root -h 127.0.0.1 -P 4002 << EOF DROP TABLE IF EXISTS test.test; CREATE TABLE test.test (db VARCHAR(255)); INSERT INTO test.test (db) VALUES ('tidb-1'); EOF mysql -u root -h 127.0.0.1 -P 4003 << EOF DROP TABLE IF EXISTS test.test; CREATE TABLE test.test (db VARCHAR(255)); INSERT INTO test.test (db) VALUES ('tidb-2'); EOFTo execute the

proxysql-prepare.sqlin ProxySQL Admin Interface, execute thedocker-compose execcommand as follows:docker-compose exec proxysql sh -c "mysql -uadmin -padmin -h127.0.0.1 -P6032 < ./proxysql-prepare.sql"The preceding SQL file runs and triggers the following operations:

- Adds hosts of three TiDB Servers and set all

hostgroup_idas0. - Makes the configuration of TiDb Servers effective and saves it on disk.

- Adds a

rootuser with an empty password and setsdefault_hostgroupas0, corresponding to the precedinghostgroup_idof TiDB Servers. - Makes the configuration of the user effective and saves it on disk.

- Adds hosts of three TiDB Servers and set all

Log in to ProxySQL MySQL Interface with the

rootuser and query 5 times using the following statements. The expected output contains'tidb-0','tidb-1', and'tidb-2'three different values.mysql -u root -h 127.0.0.1 -P 6034 -t << EOF SELECT * FROM test.test; SELECT * FROM test.test; SELECT * FROM test.test; SELECT * FROM test.test; SELECT * FROM test.test; EOFTo stop and remove containers and networks, you can use the following command:

docker-compose down

Expected output

There are three different results ('tidb-0', 'tidb-1', and 'tidb-2') in the expected output, but the exact order cannot be expected. The following is one of the expected outputs:

# ./test-load-balance.sh

Creating network "load-balance-admin-interface_default" with the default driver

Creating load-balance-admin-interface_tidb-1_1 ... done

Creating load-balance-admin-interface_tidb-2_1 ... done

Creating load-balance-admin-interface_tidb-0_1 ... done

Creating load-balance-admin-interface_proxysql_1 ... done

+--------+

| db |

+--------+

| tidb-2 |

+--------+

+--------+

| db |

+--------+

| tidb-0 |

+--------+

+--------+

| db |

+--------+

| tidb-1 |

+--------+

+--------+

| db |

+--------+

| tidb-1 |

+--------+

+--------+

| db |

+--------+

| tidb-1 |

+--------+

Stopping load-balance-admin-interface_proxysql_1 ... done

Stopping load-balance-admin-interface_tidb-0_1 ... done

Stopping load-balance-admin-interface_tidb-2_1 ... done

Stopping load-balance-admin-interface_tidb-1_1 ... done

Removing load-balance-admin-interface_proxysql_1 ... done

Removing load-balance-admin-interface_tidb-0_1 ... done

Removing load-balance-admin-interface_tidb-2_1 ... done

Removing load-balance-admin-interface_tidb-1_1 ... done

Removing network load-balance-admin-interface_default

Use Admin Interface to configure user split

Change to the sample directory:

cd example/user-split-admin-interface

Run with a script

To configure a user split traffic using ProxySQL Admin Interface, you can run the test-user-split.sh script using the following command:

./test-user-split.sh

Run step by step

The preceding test-user-split.sh script can be run step by step as follows:

Start two TiDB containers and a ProxySQL instance.

docker-compose up -d- Start two TiDB containers using

docker-compose. All the ports in the container are4000and host ports are4001and4002. - After you start TiDB containers, the ProxySQL instance is started. The port of ProxySQL MySQL Interface in the container is

6033and the host port is6034. - The port of ProxySQL Admin Interface is not exposed because it can only be accessed in the container.

- For more details about the process, refer to

docker-compose.yaml.

- Start two TiDB containers using

In the two TiDB containers, create the same table schema with different data (

'tidb-0'and'tidb-1') to distinguish TiDB instances.mysql -u root -h 127.0.0.1 -P 4001 << EOF DROP TABLE IF EXISTS test.test; CREATE TABLE test.test (db VARCHAR(255)); INSERT INTO test.test (db) VALUES ('tidb-0'); EOF mysql -u root -h 127.0.0.1 -P 4002 << EOF DROP TABLE IF EXISTS test.test; CREATE TABLE test.test (db VARCHAR(255)); INSERT INTO test.test (db) VALUES ('tidb-1'); EOFCreate a new user for ProxySQL in the

tidb-1instance:mysql -u root -h 127.0.0.1 -P 4002 << EOF CREATE USER 'root1' IDENTIFIED BY ''; GRANT ALL PRIVILEGES ON *.* TO 'root1'@'%'; FLUSH PRIVILEGES; EOFTo execute the

proxysql-prepare.sqlin ProxySQL Admin Interface, execute thedocker-compose execcommand as follows:docker-compose exec proxysql sh -c "mysql -uadmin -padmin -h127.0.0.1 -P6032 < ./proxysql-prepare.sql"The preceding SQL file runs and triggers the following operations:

- Adds hosts of two TiDB Servers. The

hostgroup_idoftidb-0is0andhostgroup_idoftidb-1is1. - Makes the configuration of TiDb Servers effective and saves it on disk.

- Adds a

rootuser with an empty password and setsdefault_hostgroupas0. It indicates that the SQL routes totidb-0by default. - Adds a user

root1with an empty password and setsdefault_hostgroupas1. It indicates that the SQL routes totidb-1by default. - Makes the configuration of the user effective and saves it on disk.

- Adds hosts of two TiDB Servers. The

Log in to ProxySQL MySQL Interface with the

rootuser androot1user. The expected output contains'tidb-0'and'tidb-1'two different values.mysql -u root -h 127.0.0.1 -P 6034 -e "SELECT * FROM test.test;" mysql -u root1 -h 127.0.0.1 -P 6034 -e "SELECT * FROM test.test;"To stop and remove containers and networks, you can use the following command:

docker-compose down

Expected output

The following is one of the expected outputs:

# ./test-user-split.sh

Creating network "user-split-admin-interface_default" with the default driver

Creating user-split-admin-interface_tidb-1_1 ... done

Creating user-split-admin-interface_tidb-0_1 ... done

Creating user-split-admin-interface_proxysql_1 ... done

+--------+

| db |

+--------+

| tidb-0 |

+--------+

+--------+

| db |

+--------+

| tidb-1 |

+--------+

Stopping user-split-admin-interface_proxysql_1 ... done

Stopping user-split-admin-interface_tidb-0_1 ... done

Stopping user-split-admin-interface_tidb-1_1 ... done

Removing user-split-admin-interface_proxysql_1 ... done

Removing user-split-admin-interface_tidb-0_1 ... done

Removing user-split-admin-interface_tidb-1_1 ... done

Removing network user-split-admin-interface_default

Use Admin Interface to configure proxy rules

Change to the sample directory:

cd example/proxy-rule-admin-interface

Run with script

To configure proxy rules to use different TiDB servers for executing read and write SQLs (if not matched, use default_hostgroup) using ProxySQL Admin Interface, you can run proxy-rule-split.sh using the following command:

./proxy-rule-split.sh

Run step by step

The preceding proxy-rule-split.sh script can be run step by step as follows:

Start two TiDB containers and a ProxySQL instance.

docker-compose up -d- Start two TiDB containers using

docker-compose. All the ports in the container are4000and host ports are4001and4002. - After you start TiDB containers, the ProxySQL instance is started. The port of ProxySQL MySQL Interface in the container is

6033and the host port is6034. - The port of ProxySQL Admin Interface is not exposed because it can only be accessed in the container.

- For more details about the process, refer to

docker-compose.yaml

- Start two TiDB containers using

In the two TiDB containers, create the same table schema with different data (

'tidb-0'and'tidb-1') to distinguish TiDB instances.mysql -u root -h 127.0.0.1 -P 4001 << EOF DROP TABLE IF EXISTS test.test; CREATE TABLE test.test (db VARCHAR(255)); INSERT INTO test.test (db) VALUES ('tidb-0'); EOF mysql -u root -h 127.0.0.1 -P 4002 << EOF DROP TABLE IF EXISTS test.test; CREATE TABLE test.test (db VARCHAR(255)); INSERT INTO test.test (db) VALUES ('tidb-1'); EOFTo execute the

proxysql-prepare.sqlin ProxySQL Admin Interface, execute thedocker-compose execcommand as follows:docker-compose exec proxysql sh -c "mysql -uadmin -padmin -h127.0.0.1 -P6032 < ./proxysql-prepare.sql"The preceding SQL file runs and triggers the following operations:

- Adds hosts of two TiDB Servers. The

hostgroup_idoftidb-0is0andhostgroup_idoftidb-1is1. - Makes the configuration of TiDB Servers effective and saves it on disk.

- Adds a user

rootwith an empty password and setsdefault_hostgroupas0. It indicates that the SQL routes totidb-0by default. - Makes the configuration of the user effective and save it on disk.

- Adds the rule

^SELECT.*FOR UPDATE$withrule_idas1anddestination_hostgroupas0. If a SQL statement match this rule, it used the TiDB Server withhostgroupas0(this rule forwardsSELECT ... FOR UPDATEto the written database). - Adds the rule

^SELECTwithrule_idas2anddestination_hostgroupas1. If SQL statements match this rule, it uses the TiDB Server withhostgroupas1. - Makes the configuration of the rule effective and saves it on disk.

NoteMore details about the matching rules:

ProxySQL tries to match the rules one by one in the order of

rule_idfrom smallest to largest.^matches the beginning of a SQL statement and$matches the end.match_digestmatches the parameterized SQL statement. For more details, see query_processor_regex.Important parameters:

digest: match the parameterized Hash value.match_pattern: match the raw SQL statements.negate_match_pattern: if you set the value to1, inverse the match formatch_digestormatch_pattern.log: whether to log the query.replace_pattern: if it is not empty, this is the pattern with which to replace the matched pattern.

For full parameters, see mysql_query_rules.

- Adds hosts of two TiDB Servers. The

Log in to ProxySQL MySQL Interface with the

rootuser:mysql -u root -h 127.0.0.1 -P 6034You can run the following statements:

SELECTstatement:SELECT * FROM test.test;The statement is expected to match rules with

rule_idof2and forward the statement to the TiDB servertidb-1withhostgroupof1.SELECT ... FOR UPDATEstatement:SELECT * FROM test.test for UPDATE;The statement is expected to match rules with

rule_idof1and forward the statement to the TiDB servertidb-0withhostgroupof0.Transaction:

BEGIN; INSERT INTO test.test (db) VALUES ('insert this and rollback later'); SELECT * FROM test.test; ROLLBACK;The

BEGINstatement is expected to not match all rules. It uses thedefault_hostgroupof the user (It is0) and thus forwards to the TiDB servertidb-0(hostgroupis0). And ProxySQL enables usertransaction_persistentby default, which will cause all statements within the same transaction to run in the samehostgroup. So theINSERTstatement andSELECT * FROM test.test;will also be forwarded to the TiDB Servertidb-0(hostgroupis0).

To stop and remove containers and networks, you can use the following command:

docker-compose down

Expected output

# ./proxy-rule-split.sh

Creating network "proxy-rule-admin-interface_default" with the default driver

Creating proxy-rule-admin-interface_tidb-1_1 ... done

Creating proxy-rule-admin-interface_tidb-0_1 ... done

Creating proxy-rule-admin-interface_proxysql_1 ... done

+--------+

| db |

+--------+

| tidb-1 |

+--------+

+--------+

| db |

+--------+

| tidb-0 |

+--------+

+--------------------------------+

| db |

+--------------------------------+

| tidb-0 |

| insert this and rollback later |

+--------------------------------+

Stopping proxy-rule-admin-interface_proxysql_1 ... done

Stopping proxy-rule-admin-interface_tidb-0_1 ... done

Stopping proxy-rule-admin-interface_tidb-1_1 ... done

Removing proxy-rule-admin-interface_proxysql_1 ... done

Removing proxy-rule-admin-interface_tidb-0_1 ... done

Removing proxy-rule-admin-interface_tidb-1_1 ... done

Removing network proxy-rule-admin-interface_default

Use the configuration file to configure load balancing

To configure load balancing using the configuration file, you can run test-load-balance.sh using the following command:

cd example/load-balance-config-file

./test-load-balance.sh

The expected output is the same as that of Use Admin Interface to configure load balancing. The only change is using the configuration file to initialize the ProxySQL configuration.

The configuration of ProxySQL is stored in SQLite. The configuration file is only used when the SQLite is not created.

It is recommended that you use the configuration file only for initialization but not for modifying configuration items, because configuration through the ProxySQL Admin Interface supports the following features:

- Input validation.

- Remote configuration by any MySQL client.

- Runtime configuration for maximum uptime (no need to restart).

- Propagation the configuration to other ProxySQL nodes if ProxySQL Cluster is configured.